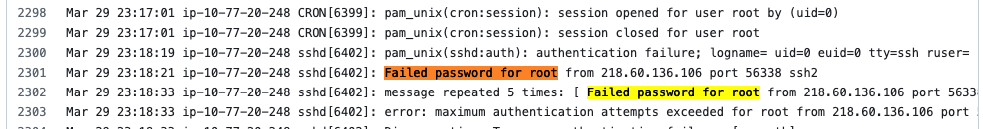

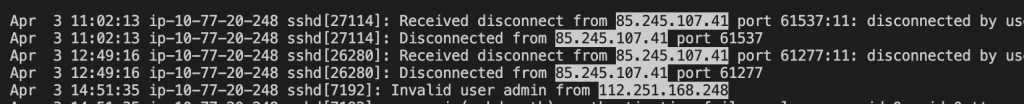

Using (e)grep, cut, awk, and sed to extract specific information from Linux logs is one of the daily tasks of a Linux system administrator. For example:

```bash
grep "Failed" '/var/log/auth.log' | grep -v root | awk -F 'from ' '{ print $2} ' | awk '{print $1}' | sort | uniq -c | sort -nr | while read COUNT IP
```

However, in this article, I want to demonstrate how to use PowerShell to extract the number of failed root logins on a Linux server.

Download an example /var/log/auth.log file from GitHub (https://github.com/elastic/examples/blob/master/Machine%20Learning/Security%20Analytics%20Recipes/suspicious_login_activity/data/auth.log).

Save it in your Downloads directory, then read the content of the file with the Get-Content cmdlet:

```powershell
$inputpath = get-content "/Users/tungle/Downloads/auth.log"
```

Next, I want to explain how to use PowerShell to extract specific information from the log file. If the number of failed attempts from an IP address is greater than the LIMIT, the script displays the count, the IP address, and the geolocation of that IP address.
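To see what Get-Content hands to the rest of the pipeline, here is a minimal sketch that writes a few log lines to a temporary file and reads them back. The sample lines, the user name, and the file name auth-sample.log are hypothetical (the IP addresses come from the documentation ranges); the point is that Get-Content returns one string per line, so the result can be counted and indexed like an array.

```powershell
# Hypothetical sshd log lines, used only for illustration
$sample = @(
    'Mar 27 13:06:56 server sshd[1291]: Failed password for root from 203.0.113.5 port 54259 ssh2'
    'Mar 27 13:07:01 server sshd[1293]: Accepted password for alice from 198.51.100.7 port 50012 ssh2'
    'Mar 27 13:07:09 server sshd[1295]: Failed password for root from 203.0.113.5 port 54311 ssh2'
)

# Write the sample to a temporary file, then read it back line by line
$tempFile = Join-Path ([System.IO.Path]::GetTempPath()) 'auth-sample.log'
Set-Content -Path $tempFile -Value $sample
$lines = Get-Content -Path $tempFile

# Get-Content returns an array of strings, one element per line
"Number of lines: $($lines.Count)"
"First line: $($lines[0])"

# Clean up the temporary file
Remove-Item -Path $tempFile
```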

First, we need a PowerShell regular expression that describes the format of an IPv4 address:

```powershell
$regex = "\b\d{1,3}\.\d{1,3}\.\d{1,3}\.\d{1,3}\b"
```

Filter all lines in the auth.log file that match the IP address pattern:

```powershell
$output = $inputpath | Select-String -Pattern $regex -AllMatches
```

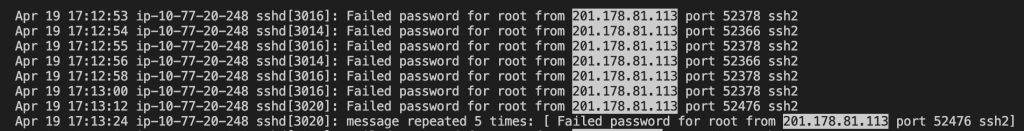
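The following minimal sketch shows two details of this step on a hypothetical sshd line that contains two addresses: what -AllMatches changes, and why the dots in the pattern are escaped. The sample line and the test string 203x0x113x5 are made up for illustration.

```powershell
$regex = "\b\d{1,3}\.\d{1,3}\.\d{1,3}\.\d{1,3}\b"

# Hypothetical sshd line that contains two IPv4 addresses
$line = 'sshd[1402]: Connection from 203.0.113.5 port 54259 on 192.0.2.10 port 22'

# Without -AllMatches, Select-String records only the first match in the line
$first = $line | Select-String -Pattern $regex
# With -AllMatches, every match in the line is stored in the Matches property
$all = $line | Select-String -Pattern $regex -AllMatches

"Without -AllMatches: $($first.Matches.Count) match(es)"
"With -AllMatches:    $($all.Matches.Count) match(es)"

# The escaped \. matters: an unescaped . matches any character, not just a dot
$escaped   = '203x0x113x5' -match $regex                                 # False
$unescaped = '203x0x113x5' -match '\b\d{1,3}.\d{1,3}.\d{1,3}.\d{1,3}\b'   # True
```

Note that the pattern only checks the shape of an address, so a string such as 999.1.1.1 would also match.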

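Select the lines that match “failed password for root”. Select-String is case-insensitive by default, so this also matches the “Failed password for root” entries in the log.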

```powershell
$output = $inputpath | Select-String -Pattern $regex -AllMatches | Select-String "failed password for root"
```

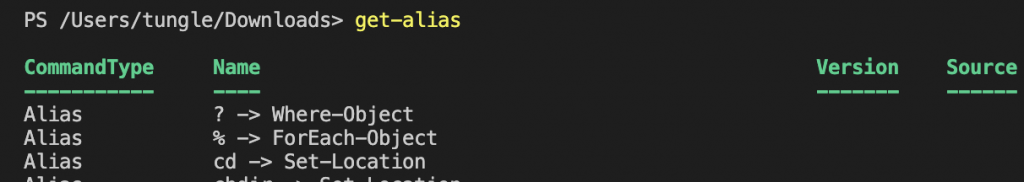

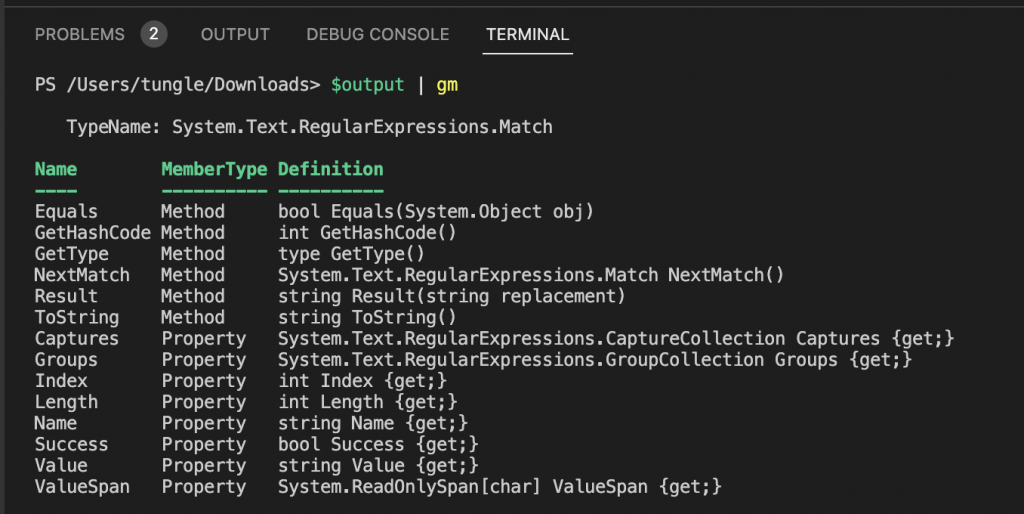

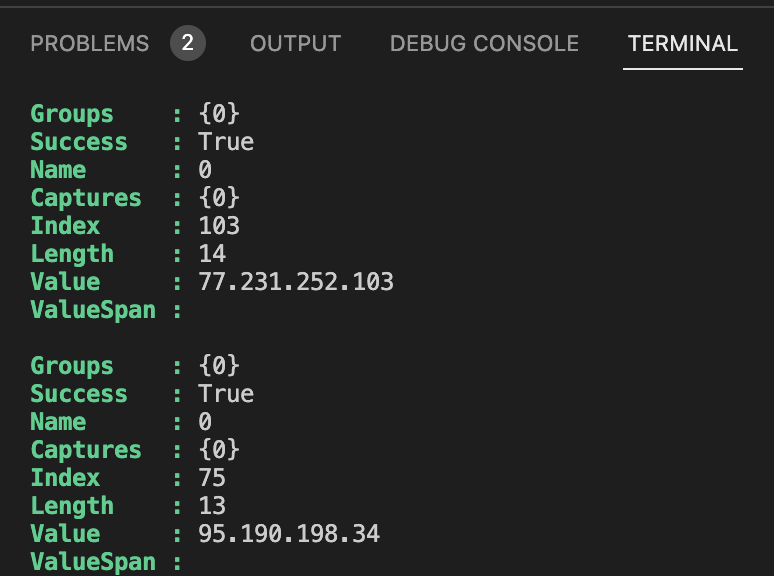
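Chaining two Select-String calls is the approach used in this article. An alternative ordering that some readers may find easier to follow is to filter the lines with the -match operator first and extract the IP addresses afterwards. This is a minimal sketch with hypothetical sample lines, not the article's pipeline:

```powershell
$regex = "\b\d{1,3}\.\d{1,3}\.\d{1,3}\.\d{1,3}\b"

# Hypothetical auth.log lines
$lines = @(
    'sshd[1291]: Failed password for root from 203.0.113.5 port 54259 ssh2'
    'sshd[1292]: Failed password for invalid user admin from 198.51.100.7 port 40022 ssh2'
    'sshd[1293]: Accepted password for alice from 192.0.2.10 port 50012 ssh2'
)

# Keep only failed root logins; -match is case-insensitive, like Select-String,
# so "failed password for root" also matches "Failed password for root"
$rootFailures = $lines | Where-Object { $_ -match 'failed password for root' }

# Then pull the IP address out of each remaining line
$ips = $rootFailures | Select-String -Pattern $regex -AllMatches |
    ForEach-Object { $_.Matches } |
    ForEach-Object { $_.Value }
$ips
```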

Next, I expand the Matches property of each result, which holds the regex matches found in that line (see the Microsoft documentation for the Regex.Matches method and the MatchCollection object it returns: https://learn.microsoft.com/en-us/dotnet/api/system.text.regularexpressions.regex.matches?view=netframework-4.7.2). % is an alias of ForEach-Object.

```powershell
$output = $inputpath | Select-String -Pattern $regex -AllMatches | Select-String "failed password for root" | % { $_.Matches }
```

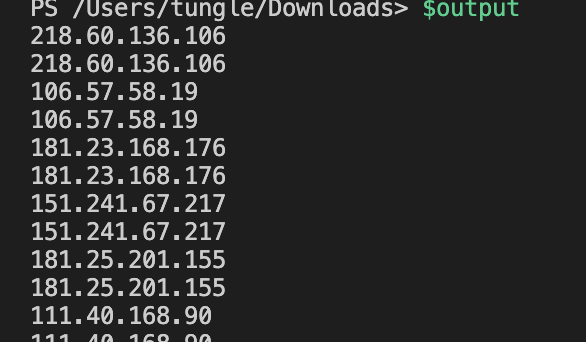
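The Match objects seen here are the same kind of objects that the .NET Regex.Matches method returns. A minimal sketch calling it directly on a hypothetical log line, to show what each Match exposes:

```powershell
$regex = "\b\d{1,3}\.\d{1,3}\.\d{1,3}\.\d{1,3}\b"

# Hypothetical sshd line with two IPv4 addresses
$line = 'sshd[1402]: Connection from 203.0.113.5 port 54259 on 192.0.2.10 port 22'

# [regex]::Matches returns a MatchCollection with one Match object per hit
$collection = [regex]::Matches($line, $regex)

foreach ($m in $collection) {
    # Each Match exposes the matched text (Value) and its position in the line (Index)
    "{0} at index {1}" -f $m.Value, $m.Index
}
```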

Next, get the IP addresses of the failed logins from the Value property of each match:

```powershell
$output = $inputpath | Select-String -Pattern $regex -AllMatches | Select-String "failed password for root" | % { $_.Matches } | % { $_.Value }
```

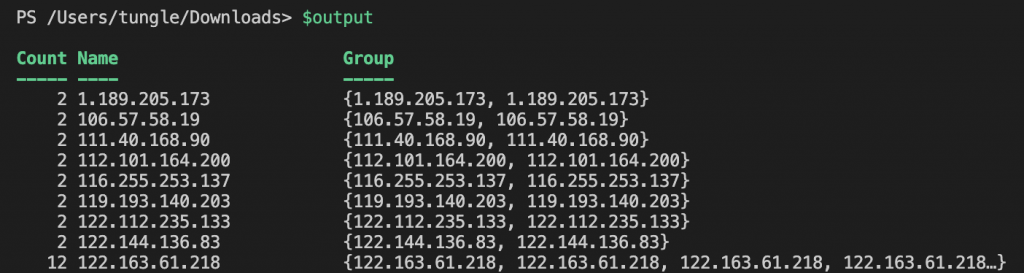
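The two ForEach-Object steps can also be written with member enumeration (available since PowerShell 3.0), which reads a property from every item of a collection. A minimal sketch with hypothetical lines, comparing both forms:

```powershell
$regex = "\b\d{1,3}\.\d{1,3}\.\d{1,3}\.\d{1,3}\b"

# Hypothetical failed root login lines
$lines = @(
    'sshd[1291]: Failed password for root from 203.0.113.5 port 54259 ssh2'
    'sshd[1295]: Failed password for root from 198.51.100.7 port 54311 ssh2'
)

$matchInfos = $lines | Select-String -Pattern $regex -AllMatches

# Pipeline form used in the article
$viaPipeline = $matchInfos | ForEach-Object { $_.Matches } | ForEach-Object { $_.Value }

# Member enumeration: .Matches.Value collects Value from every Match of every MatchInfo
$viaMemberEnum = $matchInfos.Matches.Value

$viaMemberEnum
```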

Group identical IP addresses together with Group-Object:

```powershell
$output = $inputpath | Select-String -Pattern $regex -AllMatches | Select-String "failed password for root" | % { $_.Matches } | % { $_.Value } `
    | Group-Object
```

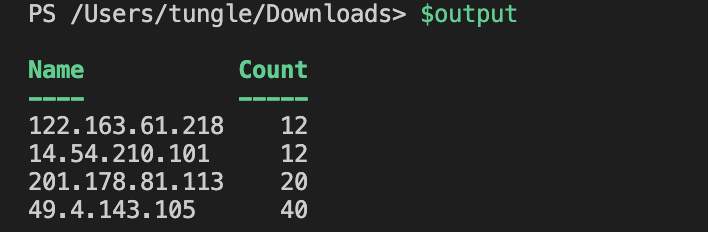

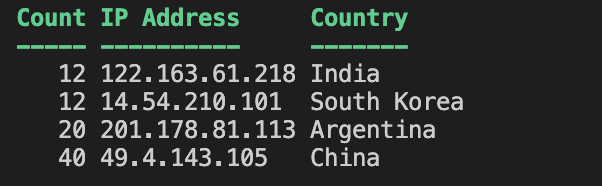
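Group-Object produces one group per distinct value: Name holds the value and Count holds how many times it occurred. A minimal sketch on a hypothetical list of IP addresses, as the previous step would produce them:

```powershell
# Hypothetical list of IP addresses extracted from failed root logins
$ips = @('203.0.113.5', '198.51.100.7', '203.0.113.5', '203.0.113.5', '198.51.100.7', '192.0.2.10')

# One group per distinct IP address; Count is the number of occurrences
$groups = $ips | Group-Object

$groups | Select-Object Name, Count
```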

Check whether the number of failed attempts is greater than the LIMIT (here, more than 10 failed login attempts):

```powershell
$output = $inputpath | Select-String -Pattern $regex -AllMatches | Select-String "failed password for root" | % { $_.Matches } | % { $_.Value } `
    | Group-Object | Select-Object Name, Count | Where-Object { $_.Count -gt 10 }
```
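The limit is hard-coded as 10 here (and 20 in the final script). A minimal sketch that keeps it in a variable instead; the name $Limit and the sample data are hypothetical, and -gt is a strict "greater than", so an IP address with exactly $Limit attempts is not reported:

```powershell
# Hypothetical limit (the article uses 10 here)
$Limit = 2

# Hypothetical list of IP addresses extracted from failed root logins
$ips = @('203.0.113.5', '198.51.100.7', '203.0.113.5', '203.0.113.5', '198.51.100.7', '192.0.2.10')

# Keep only IP addresses with more than $Limit failed attempts
$overLimit = $ips | Group-Object | Select-Object Name, Count |
    Where-Object { $_.Count -gt $Limit }

$overLimit
```

With this data, 198.51.100.7 appears exactly twice and is therefore filtered out; only 203.0.113.5 remains.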

The PowerShell script block below looks up the country of each attacking IP address. It loops over every entry in the $output variable above and outputs the count, the IP address, and the country for each IP address that exceeded the limit.

```powershell
ForEach ($line in $output) {
    $IP = $line.Name
    #$IP

    # Query the geolocation of the IP address via a free API
    $result = Invoke-RestMethod -Method Get -Uri "http://ip-api.com/json/$IP"

    # Create a PSCustomObject to save the count, IP address and country of the attempts
    $myObject = [PSCustomObject]@{
        Count        = $line.Count
        "IP Address" = $line.Name
        #IP = $result.query
        Country      = $result.Country
    }

    $myObject
}
```
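Invoke-RestMethod converts a JSON reply into PowerShell objects, in the same way ConvertFrom-Json does. The minimal sketch below runs offline: the JSON string is a hypothetical reply shaped like a small subset of the ip-api.com response, and the country value is made up. It also shows why $result.Country works even though the JSON field is named country: PowerShell property names are case-insensitive.

```powershell
# Hypothetical reply body, shaped like a subset of http://ip-api.com/json/<IP>
$json = '{"status":"success","country":"Exampleland","query":"203.0.113.5"}'

# ConvertFrom-Json turns the JSON text into a PSCustomObject
$result = $json | ConvertFrom-Json

# Stands in for one entry of $output (Name and Count from Group-Object)
$line = [PSCustomObject]@{ Name = '203.0.113.5'; Count = 12 }

# Property lookup is case-insensitive, so $result.Country reads the "country" field
$myObject = [PSCustomObject]@{
    Count        = $line.Count
    "IP Address" = $line.Name
    Country      = $result.Country
}

$myObject
```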

Below is a PS script block to check the failed root attempts.

$inputpath = get-content "/Users/tungle/Downloads/auth.log"

#$regex = ‘\b\d{1,3}.\d{1,3}.\d{1,3}.\d{1,3}\b’

$regex = "\b\d{1,3}\.\d{1,3}\.\d{1,3}\.\d{1,3}\b"

#$inputpath | Select-String -Pattern $regex -AllMatches | Select-String "failed password for root"

#$inputpath | Select-String -Pattern $regex -AllMatches | Select-String "failed password for root" | % { $_.Matches }

$output = $inputpath | Select-String -Pattern $regex -AllMatches | Select-String "failed password for root" | % { $_.Matches } | % { $_.Value } `

| Group-Object | Select-Object Name, Count | Where-Object { $_.Count -gt 10 }

ForEach($line in $output){

$IP = $line.Name

#$IP

$result = Invoke-RestMethod -Method Get -Uri http://ip-api.com/json/$IP

# Create a PSCustomObject to save Count, IP address and Country attempts

$myObject = [PSCustomObject]@{

Count = $line.Count

"IP Address" = $line.Name

#IP = $result.query

Country = $result.Country

}

$myObject

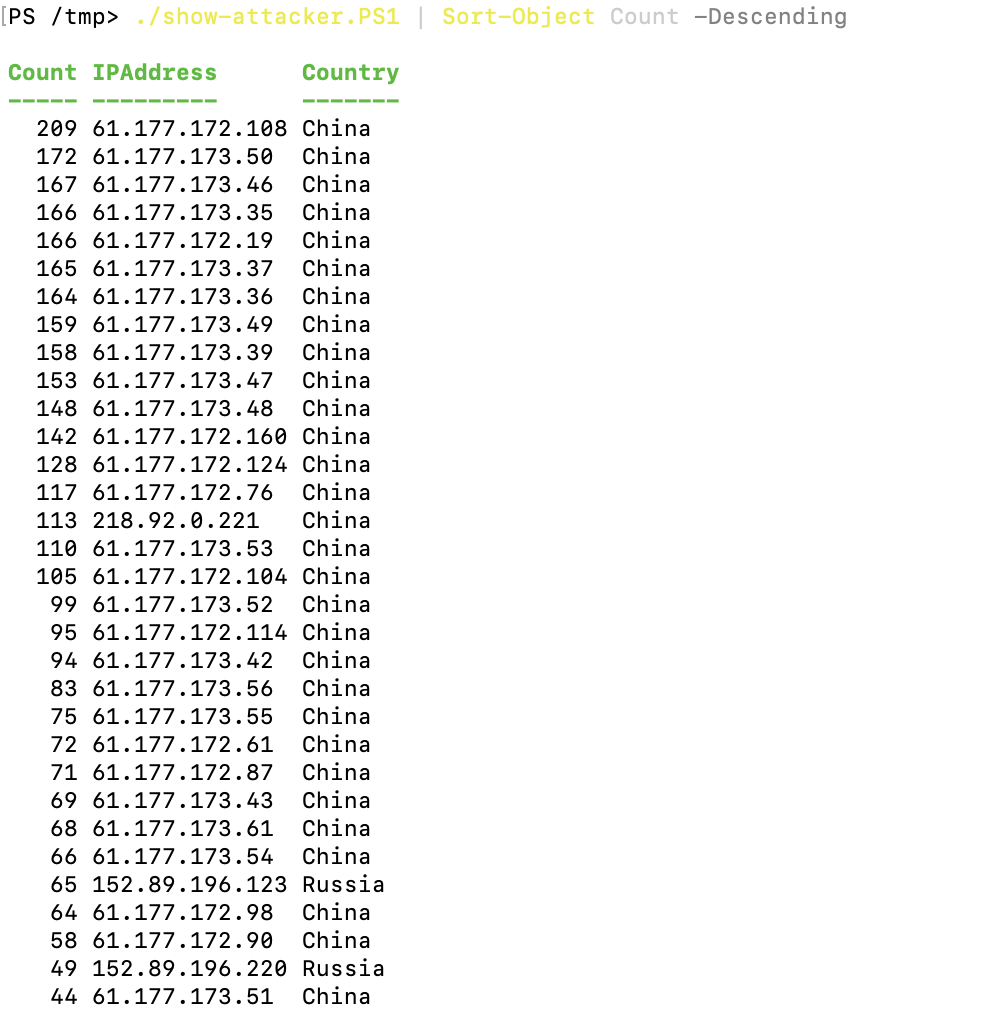

}This is a PS script (show-attacker.PS1) to run in a cloud-based Linux virtual machine.

$inputpath = get-content "/var/log/auth.log"

#$inputpath = get-content "/Users/tungle/Downloads/auth.log.txt"

$regex = "\b\d{1,3}\.\d{1,3}\.\d{1,3}\.\d{1,3}\b"

$output = $inputpath | Select-String -Pattern $regex -AllMatches | Select-String "failed password for root" | % { $_.Matches } | % { $_.Value } `

| Group-Object | Select-Object Name, Count | Where-Object {$_.Count -gt 20}

ForEach($line in $output){

$IP = $line.Name

$result = Invoke-RestMethod -Method Get -Uri http://ip-api.com/json/$IP

# Sleep a second

Start-Sleep 1

#Write-Output $_count

$myObject = [PSCustomObject]@{

Count = $line.Count

"IPAddress" = $line.Name

#IP = $result.query

Country = $result.Country

}

$myObject

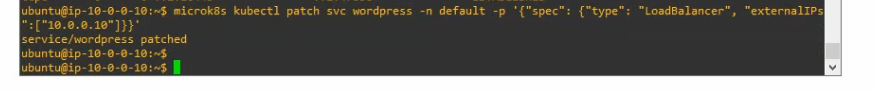

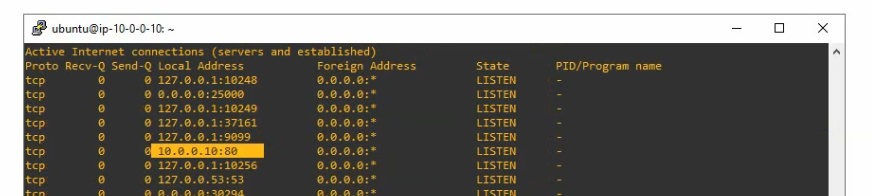

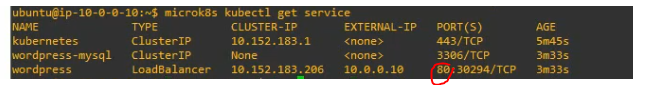

}Run the script in Linux server.

```powershell
./show-attacker.PS1 | Sort-Object Count -Descending
```

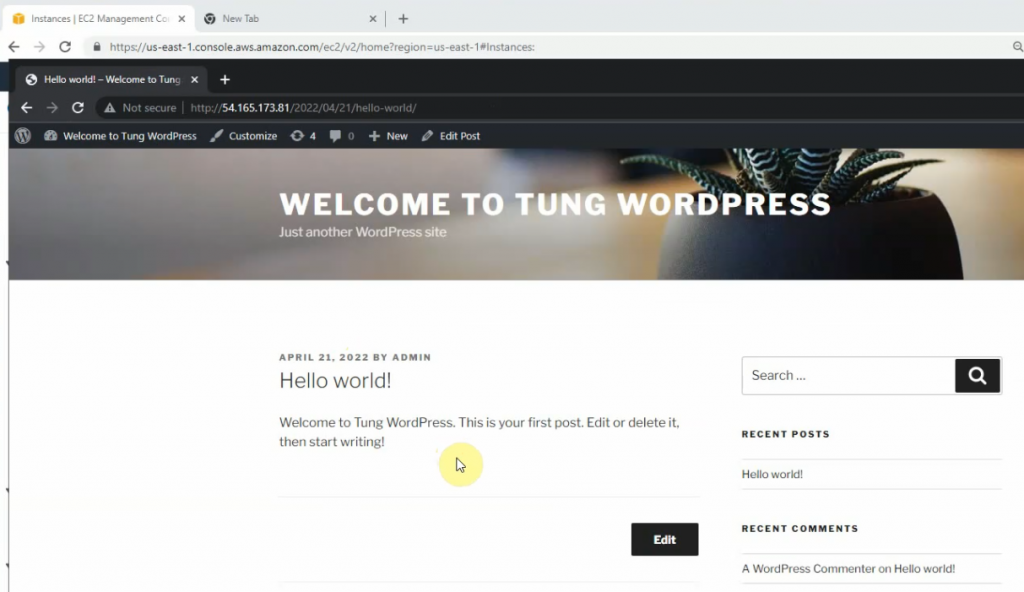
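To reuse the logic without editing the file path and the limit inside the script, one option is to wrap the counting part in a function with parameters. This is a minimal sketch under stated assumptions: the function name Get-FailedRootLogin and its -Path and -Limit parameters are hypothetical, the geolocation lookup is left out so it runs offline, it filters lines with -match before extracting the IP address instead of chaining two Select-String calls, and the sample log is made up.

```powershell
function Get-FailedRootLogin {
    # Hypothetical helper: counts failed root logins per IP address in an auth log
    param(
        [Parameter(Mandatory)] [string] $Path,
        [int] $Limit = 10
    )

    $regex = "\b\d{1,3}\.\d{1,3}\.\d{1,3}\.\d{1,3}\b"

    Get-Content -Path $Path |
        Where-Object { $_ -match 'failed password for root' } |
        Select-String -Pattern $regex -AllMatches |
        ForEach-Object { $_.Matches } |
        ForEach-Object { $_.Value } |
        Group-Object |
        Where-Object { $_.Count -gt $Limit } |
        Sort-Object Count -Descending |
        Select-Object Name, Count
}

# Usage with a small hypothetical log written to a temporary file
$tempFile = Join-Path ([System.IO.Path]::GetTempPath()) 'auth-demo.log'
$sample = @(
    1..3 | ForEach-Object { "sshd[100$_]: Failed password for root from 203.0.113.5 port 5000$_ ssh2" }
    1..2 | ForEach-Object { "sshd[200$_]: Failed password for root from 198.51.100.7 port 6000$_ ssh2" }
    'sshd[3001]: Failed password for invalid user admin from 192.0.2.10 port 7000 ssh2'
)
Set-Content -Path $tempFile -Value $sample

$report = Get-FailedRootLogin -Path $tempFile -Limit 1
$report

Remove-Item -Path $tempFile
```

The output can then be piped into a lookup loop like the one above to add the country column back.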

Finally, this shows how PowerShell can be used to extract specific information from the authentication logs on a Linux server.